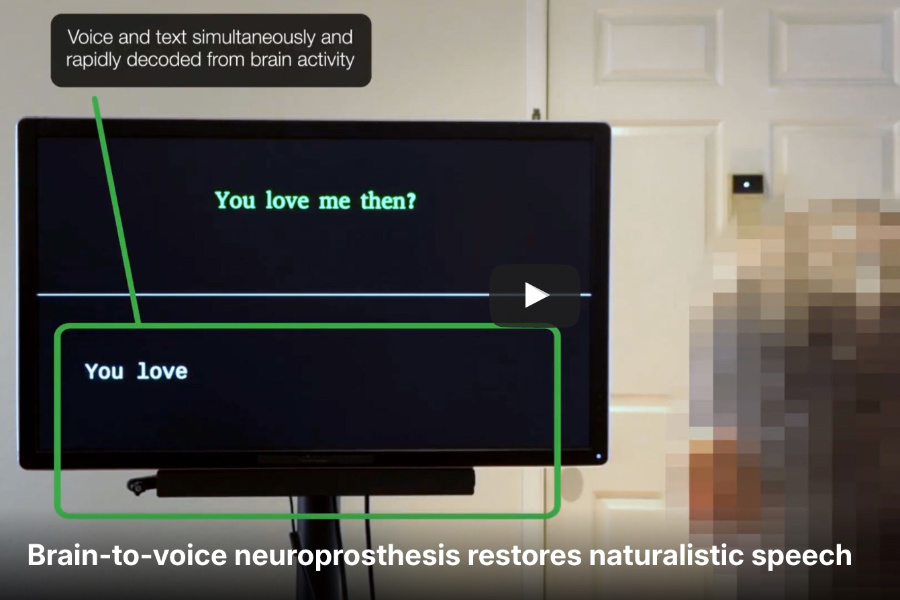

In a groundbreaking advancement, researchers from UC Berkeley and UC San Francisco have developed an AI-powered brain-computer interface that can translate brain signals into natural-sounding speech for people with severe paralysis.

Why it matters

Now, intelligible, naturalistic speech can be generated almost instantly as a person attempts to speak. This marks a critical step towards restoring fluent communication for those who have lost the ability to talk due to paralysis.

The challenge

Earlier brain-computer interfaces for speech suffered from long delays. It could take around 8 seconds to decode a single sentence from brain signals. This significant lag made communication difficult and unnatural.

The new neuroprosthesis overcomes the major hurdle of latency, the frustrating delay found in previous systems.

“This proof-of-concept framework is quite a breakthrough. We are optimistic that we can now make advances at every level. On the engineering side, for example, we will continue to push the algorithm to see how we can generate speech better and faster.” —Cheol Jun Cho, co-lead author, UC Berkeley Ph.D. student in electrical engineering and computer sciences

Researchers connect Ann's brain implant to the voice synthesizer computer. (Photo by Noah Berger)

Researchers connect Ann's brain implant to the voice synthesizer computer. (Photo by Noah Berger)

How it works

The new technology taps the neural activity of the motor cortex, the brain region that controls the muscles used for speaking.

- To train the AI, researchers worked with a participant named Ann. She looked at phrases on a screen and silently attempted to say them. Her brain activity was recorded during these attempts.

- Since Ann has no remaining vocal ability, the team used a pre-trained text-to-speech model to generate target audio. They mapped Ann's neural signals to this target audio, even incorporating samples of her pre-injury voice to personalize the synthesized output.

- The AI model learns this mapping and can then continuously decode brain activity into audible speech as Ann intends to speak, initiating the sound within about one second of her intent.

Click the image above and scroll to the video.

Click the image above and scroll to the video.

The big picture

This faster method matched the accuracy of previous, slower techniques. Notably, the AI could generate words it wasn't trained on, demonstrating its ability to understand and reproduce core speech patterns.

- Researchers confirmed the technique works with various brain-sensing technologies, including surface electrodes and penetrating arrays.

- Ann, the study participant, reported that hearing her voice in near-real time felt more under her control, increasing her sense of deep involvement.

- The teams are now working on faster speech generation and incorporating more tone and pitch into vocal expression.